SP6’s Quick Tips for Splunk Query Optimization

Practice good habits when writing Splunk queries and keep your Splunk searches as efficient as possible.

I think it happens to every Splunker, as it happened to me. Yes, it went to my head a bit, and I got to thinking I was the big data big shot, mining value out of every piece of data I came across in a jiffy. You want what? Yes, I can do that in a second! Everyone was happy, and it seemed like the pride and praise would only grow… until that day came. The day when big data turned into huge data as the volume indexed stepped up from GBs to TBs. Coolly, I worked my usual SPL magic, but the environment was not having it and neither were the users: my Splunk query was slow, and results sputtered onto the screen many minutes later. What to do, what to do? Before telling the customer they were going to need to level up their hardware game, I’d better make sure I was squeezing every last drop of performance the Splunk environment had to give. That’s when I took a serious look at query efficiency, and these are the lessons I’ve learned since.

1. Slice and dice your data as early as possible

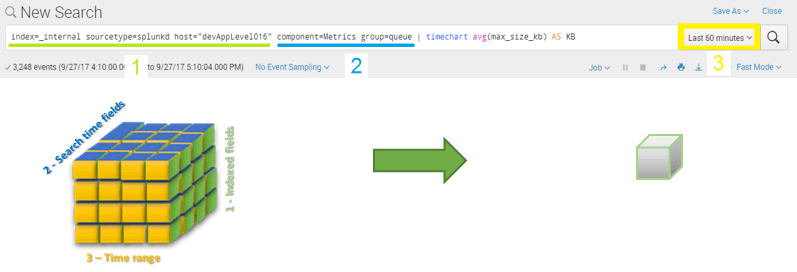

The lowest-hanging fruit in this tree is making sure you only retrieve what you will use; anything more and you’re wasting resources. Let’s start with the obvious: the time range picker. Each index bucket covers a known span of time, so Splunk decides which buckets to look at based on your query’s time range. When you narrow the time range, Splunk can discard irrelevant buckets right out of the gate, without reading them. Extra points if you’re already familiar with the “earliest” and “latest” time modifiers, which also accept relative times such as earliest=-24h.
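To see why a narrower time range pays off so quickly, here is a minimal Python sketch of the idea. It is not Splunk’s implementation: each bucket is modeled only by the earliest and latest timestamps it holds, and a bucket is opened only if its span overlaps the search window. The `Bucket` class, the `buckets_to_open` function, and the one-bucket-per-day layout are all hypothetical.

```python
from dataclasses import dataclass

DAY = 86_400  # seconds


@dataclass
class Bucket:
    """Hypothetical stand-in for an index bucket: only its time span
    (epoch seconds) and event count matter for this sketch."""
    name: str
    earliest: int
    latest: int
    event_count: int


def buckets_to_open(buckets, search_earliest, search_latest):
    """Keep only buckets whose time span overlaps the search window.
    Buckets entirely outside the window are skipped without being read."""
    return [
        b for b in buckets
        if b.latest >= search_earliest and b.earliest <= search_latest
    ]


# Hypothetical index: one bucket per day for 30 days, 1M events each.
NOW = 30 * DAY
index = [
    Bucket(f"db_{d}", d * DAY, (d + 1) * DAY - 1, 1_000_000)
    for d in range(30)
]

last_24h = buckets_to_open(index, NOW - DAY, NOW)
last_30d = buckets_to_open(index, NOW - 30 * DAY, NOW)
print(len(last_24h), sum(b.event_count for b in last_24h))  # 1 1000000
print(len(last_30d), sum(b.event_count for b in last_30d))  # 30 30000000
```

Under these assumptions, the 24-hour search touches one bucket out of thirty; the other twenty-nine are ruled out from their timestamps alone.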

Another powerful tool is the set of default fields (host, index, source, sourcetype, etc.). Because these fields are created as metadata when the data is indexed, they are readily available as filters without any field extraction at search time. As you’ve probably seen, it’s common practice to start a Splunk query by specifying at least index and sourcetype. Ideally, the values assigned to these fields let you run a dense search, where a large share of the events retrieved are ones you actually need, rather than a sparse “needle in a haystack” search. This is why it’s a good idea to separate data logically into indexes that serve different requirements.
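The payoff of separating data into indexes can be sketched with simple arithmetic. In this hypothetical Python model, each index owns a list of buckets, represented only by their event counts. Naming an index in the search means buckets belonging to other indexes are never considered at all. The index names and sizes are made up for illustration.

```python
# Hypothetical layout: each index maps to its buckets, and each bucket is
# represented only by how many events it holds.
indexes = {
    "web": [500_000, 500_000],
    "firewall": [2_000_000, 2_000_000, 2_000_000],
    "app": [750_000],
}


def events_in_scope(indexes, wanted):
    """Total events in the buckets of the named indexes. Buckets that
    belong to other indexes never enter the search."""
    return sum(sum(indexes[name]) for name in wanted)


web_only = events_in_scope(indexes, ["web"])            # like index=web
all_indexes = events_in_scope(indexes, list(indexes))   # like index=*
print(web_only, all_indexes)  # 1000000 7750000
```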

Finally, we have the fields extracted at search time. Although filtering on them is not as fast as filtering on default fields, they are the next tool in line. Which fields you use depends, of course, on the type of data you’re working with, but good candidates to start with are fields that identify the event type, format, log level, component, etc. Filtering on them early means that the commands that come later don’t needlessly process events that don’t contain what you’re looking for.
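The ordering described above (indexed fields first, then search-time fields, then everything else) can be sketched in Python. This is a toy model with hypothetical events, a hypothetical `level=` extraction regex, and a counter that stands in for whatever later commands (eval, stats, sort, …) would do. It shows that the results are the same either way, but the later steps handle fewer events when the search-time filter comes first.

```python
import re

# Hypothetical events: sourcetype is indexed metadata, while the log
# level only exists inside _raw and must be extracted at search time.
events = [
    {"sourcetype": "app_log", "_raw": "ts=1 level=ERROR component=auth msg=denied"},
    {"sourcetype": "app_log", "_raw": "ts=2 level=INFO component=auth msg=ok"},
    {"sourcetype": "access_log", "_raw": '10.0.0.1 "GET / HTTP/1.1" 200'},
    {"sourcetype": "app_log", "_raw": "ts=3 level=ERROR component=db msg=timeout"},
]

LEVEL_RE = re.compile(r"level=(\w+)")  # hypothetical extraction rule


def run(events, filter_early):
    """Return (matching events, number of events handled by later steps)."""
    later_steps_work = 0
    results = []
    for event in events:
        if event["sourcetype"] != "app_log":
            continue  # indexed field: no parsing required
        m = LEVEL_RE.search(event["_raw"])  # search-time extraction
        level = m.group(1) if m else None
        if filter_early and level != "ERROR":
            continue  # discarded before any later command sees it
        later_steps_work += 1  # stands in for eval, stats, sort, ...
        if level == "ERROR":
            results.append(event)
    return results, later_steps_work


early, early_work = run(events, filter_early=True)
late, late_work = run(events, filter_early=False)
print(len(early), early_work)  # 2 2
print(len(late), late_work)    # 2 3
```

With four events the difference is one; across millions of events, the same ratio is what separates a fast search from a slow one.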

2. Streaming vs. non-streaming commands

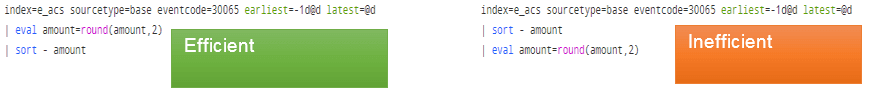

You’ll want to place your streaming commands before your non-streaming commands. Streaming commands (strictly speaking, distributable streaming commands) process each event on its own, so they can run on the indexers against whatever subset of the data each indexer holds. Non-streaming commands generally need the complete set of results before they can execute. As soon as a non-streaming command appears in your query, the results are sent to the search head, and that command and everything after it run there, which keeps the indexers from doing as much of the heavy lifting as possible.
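One way to picture the cost is to count how many events cross the network to the search head. In this hypothetical Python model, three indexers each hold a slice of events. When the streaming filter runs first, each indexer filters its own slice and ships only the matches. When the non-streaming sort runs first, every event has to be shipped before anything else can happen. The cluster layout, the `is_error` filter, and the function names are illustrative assumptions.

```python
# Hypothetical cluster: each inner list is one indexer's slice of events.
indexers = [
    [{"status": 200, "bytes": 512}, {"status": 500, "bytes": 128}],
    [{"status": 404, "bytes": 64}, {"status": 200, "bytes": 2048}],
    [{"status": 500, "bytes": 256}, {"status": 200, "bytes": 1024}],
]


def is_error(event):
    return event["status"] >= 500


def filter_then_sort(indexers):
    """Streaming step first: each indexer filters its own slice, so only
    matching events are shipped to the search head for sorting."""
    shipped = [e for slice_ in indexers for e in slice_ if is_error(e)]
    return sorted(shipped, key=lambda e: e["bytes"]), len(shipped)


def sort_then_filter(indexers):
    """Non-streaming step first: every event must reach the search head
    before sorting, and the filter then also runs there."""
    shipped = [e for slice_ in indexers for e in slice_]
    ordered = sorted(shipped, key=lambda e: e["bytes"])
    return [e for e in ordered if is_error(e)], len(shipped)


errors_a, shipped_a = filter_then_sort(indexers)
errors_b, shipped_b = sort_then_filter(indexers)
print(errors_a == errors_b, shipped_a, shipped_b)  # True 2 6
```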

A good example of a streaming command is the eval command. Sort is a non-streaming command. Why? When Splunk executes eval, it only needs the fields of the event being processed to come up with an answer. This means the indexer pulling the events from disk can execute eval by itself. The sort command, on the other hand, needs all of the events on hand so it can compare them with each other. Since each indexer in a cluster holds only part of the data set, it sends what it has to the search head, and that’s where the search continues executing. Ideally, you want the indexers to do as much of the work as possible.
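The eval-versus-sort argument comes down to whether a command gives the same answer when run on pieces of the data as when run on all of it. This small Python sketch uses hypothetical per-indexer values. A per-event transformation like `eval_double` gives identical results either way, while sorting each piece separately and concatenating does not produce a globally sorted list.

```python
# Hypothetical values held by three indexers.
partitions = [[5, 1, 9], [3, 7], [8, 2]]
everything = [v for p in partitions for v in p]


def eval_double(values):
    # Like eval: each output depends only on its own input event.
    return [v * 2 for v in values]


# Streaming: running per partition and concatenating matches the whole.
eval_distributed = [v for p in partitions for v in eval_double(p)]
print(eval_distributed == eval_double(everything))  # True

# Non-streaming: sorting each partition alone is not a global sort.
sort_distributed = [v for p in partitions for v in sorted(p)]
print(sort_distributed == sorted(everything))  # False
print(sort_distributed)  # [1, 5, 9, 3, 7, 2, 8]
```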

3. Filtering using lookup or subquery

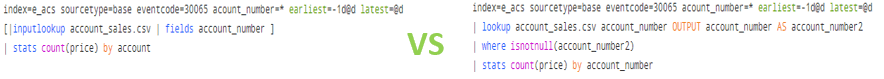

Sooner or later you’ll encounter a scenario where you need to filter events based on external criteria. Say you have a list of accounts you’re interested in and, for the sake of efficiency, you want to discard all other accounts from your query’s data set as early as possible. There are two ways to achieve this. One is to include a subquery (a subsearch, in Splunk terms) that returns the list of accounts of interest; the other is to store the list in a CSV file and use the lookup command to check whether each event’s account is in the list.
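It helps to see what the subquery turns into. The Python sketch below builds an OR expression shaped roughly like the one a subquery’s results are expanded into before the main search runs. It is illustrative only: the `subsearch_to_filter` helper and the `account_id` field name are hypothetical, and Splunk’s exact quoting and parenthesization may differ. The last lines show how quickly the query text grows with a long list.

```python
def subsearch_to_filter(field, values):
    """Build an OR expression shaped roughly like a subsearch expansion.
    Illustrative only: Splunk's exact formatting may differ."""
    clauses = [f'( {field}="{v}" )' for v in values]
    return "( " + " OR ".join(clauses) + " )"


accounts = ["A1001", "A1002", "A1003"]
print(subsearch_to_filter("account_id", accounts))
# ( ( account_id="A1001" ) OR ( account_id="A1002" ) OR ( account_id="A1003" ) )

# With 10,000 hypothetical accounts, the filter alone is a long string.
big = subsearch_to_filter("account_id", [f"A{i}" for i in range(10_000)])
print(len(big))  # 258890
```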

So consider the differences between these two methods of filtering:

You’ll hear countless times that it’s best to avoid subqueries, and in most cases that is correct, especially when using join or append. In this case, however, it’s more efficient to use a subquery that pulls the accounts from a predefined lookup (for example, with the inputlookup command) and uses them to filter your results. This is because the subquery’s results become search terms in the main query, so Splunk can use bloom filters to quickly determine whether a bucket could contain events with the account IDs you need and skip the buckets that can’t. The lookup command, by contrast, runs only after events have been retrieved, so every bucket in the time range still has to be read before its events can be filtered out. The downside of the subquery approach is that it evaluates to a huge OR list of accounts, which tremendously bloats the text of the query, but this is still preferable to using lookup.
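The bloom filter advantage can be modeled in a few dozen lines of Python. This sketch is a simplification, not Splunk’s implementation: Splunk builds and sizes its bloom filters differently. Here each hypothetical bucket has a small bloom filter over its account IDs. The subquery-style search checks the filter and opens only buckets that might hold a wanted account, while the lookup-style search reads every event and then checks it. A bloom filter can return false positives, so occasionally an extra bucket may be opened, but it never skips a bucket that really holds a match.

```python
import hashlib


class BloomFilter:
    """Tiny illustrative Bloom filter: false positives are possible,
    false negatives are not."""

    def __init__(self, size=4096, hashes=3):
        self.size = size
        self.hashes = hashes
        self.bits = [False] * size

    def _positions(self, term):
        for i in range(self.hashes):
            digest = hashlib.sha256(f"{i}:{term}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, term):
        for pos in self._positions(term):
            self.bits[pos] = True

    def might_contain(self, term):
        return all(self.bits[pos] for pos in self._positions(term))


def make_bucket(account_ids):
    bloom = BloomFilter()
    for acct in account_ids:
        bloom.add(acct)
    return {"events": [{"account_id": a} for a in account_ids], "bloom": bloom}


# Hypothetical data: 10 buckets of 100 events; only the last holds A1001.
buckets = [make_bucket([f"B{n}-{i}" for i in range(100)]) for n in range(9)]
buckets.append(make_bucket(["A1001"] + [f"X-{i}" for i in range(99)]))
wanted = {"A1001", "A1002"}


def search_with_bloom(buckets, wanted):
    """Subquery-style: open a bucket only if it might hold a wanted ID."""
    read, hits = 0, []
    for b in buckets:
        if not any(b["bloom"].might_contain(a) for a in wanted):
            continue  # bucket skipped without reading its events
        read += len(b["events"])
        hits += [e for e in b["events"] if e["account_id"] in wanted]
    return hits, read


def filter_with_lookup(buckets, wanted):
    """lookup-style: every event is retrieved first, then checked."""
    read, hits = 0, []
    for b in buckets:
        read += len(b["events"])
        hits += [e for e in b["events"] if e["account_id"] in wanted]
    return hits, read


hits_bloom, read_bloom = search_with_bloom(buckets, wanted)
hits_lookup, read_lookup = filter_with_lookup(buckets, wanted)
print(len(hits_bloom), read_bloom, read_lookup)
```

Both approaches return the same single match; the difference is in how many events had to be read to find it.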

4. Know your environment

Finally, it’s useful to know what type of storage your events live on and what the retention policy is. In large environments, you’ll come across different tiers of hardware used for different indexes or buckets. For example, you might have hot and warm buckets storing a week’s worth of data on faster solid-state drives (SSDs), with older data on conventional storage. Or you could have mission-critical indexes stored on a fast SAN. Keeping this information in mind lets you design your query to avoid needlessly searching an index on slow hardware or, at the very least, warn your clients that what they’re asking for will be inherently slow no matter what magic you wield.
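If you know the storage layout, you can check a requested time range against it before running anything. The Python sketch below assumes a hypothetical policy matching the example above: the most recent 7 days sit on SSD and anything older sits on conventional storage. The `storage_tiers` helper reports which tiers a search window would touch, which is a quick way to decide whether to warn the requester. Real tier boundaries depend on your own bucket rolling and retention settings.

```python
from datetime import datetime, timedelta

# Hypothetical storage policy: the most recent 7 days (hot/warm buckets)
# sit on SSD; anything older sits on slower conventional storage.
FAST_TIER_SPAN = timedelta(days=7)


def storage_tiers(search_earliest, search_latest, now):
    """Return the storage tiers a search window would touch."""
    fast_boundary = now - FAST_TIER_SPAN
    tiers = []
    if search_latest > fast_boundary:
        tiers.append("fast (SSD)")
    if search_earliest < fast_boundary:
        tiers.append("slow (conventional)")
    return tiers


now = datetime(2024, 6, 30)
print(storage_tiers(now - timedelta(days=1), now, now))
# ['fast (SSD)']
print(storage_tiers(now - timedelta(days=30), now, now))
# ['fast (SSD)', 'slow (conventional)']
```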

Summary

There is much more that goes into the speed of your queries than how much data you’re searching and how many indexers you have. Practice good habits when writing queries and keep your searches as efficient as possible. For more search efficiency tips, assistance speeding up notoriously slow queries, or help with anything Splunk, consider reaching out to Aditum Splunk Professional Services consultants. Splunk Professional Services are experts at maximizing the performance of your queries and Splunk environment overall.

About SP6

SP6 is a Splunk consulting firm focused on Splunk professional services, including Splunk deployment, ongoing Splunk administration, and Splunk development. SP6 also has a separate division that offers Splunk recruitment and the placement of Splunk professionals into direct-hire (FTE) roles for companies that need help acquiring their own full-time staff, given the challenges that currently exist in the market.