As server clusters grow and gain more hosts, they become harder to manage. I believe this is something like the 8th law of thermodynamics, but don’t quote me on that. My current multi-site Splunk clustered environment has 4 sites, 360 indexers, 4 cluster masters, 6 deployment servers, 10 search heads, 2 license masters, and a partridge in a pear tree. As you can imagine, managing that much Splunk infrastructure is no easy task.

Enter: Splunk configuration management and remote execution frameworks.

You may have heard of some of these: Fabric, Ansible, Chef, Puppet, Saltstack, and so on. They are all good choices, but I’ve found that Saltstack and Fabric combined suit my needs best. Fabric is fantastic for bootstrapping Saltstack, and Saltstack takes me from a bare OS to a fully functional clustered Splunk environment in a matter of minutes.

This article covers using Fabric to bootstrap Saltstack, and then using Saltstack to fully deploy a multisite cluster using the base configs that you can get from Splunk. There is a corresponding Splunk user group talk on YouTube, as well as a PowerPoint slide deck that accompanies this article. This article assumes you have a basic understanding of how Splunk works and know which ports must be open for Splunk and for clustering to function.

Firewall rules needed outside of typical Splunk ports:

- TCP/4505, TCP/4506 from the minions to the salt-master

- TCP/9996 between all indexers (for index replication)

Bootstrapping Saltstack with Fabric

Fabric (fabfile.org) is a Python framework that makes the Paramiko SSH library easier to work with and is made solely for remote execution. It is an agentless Python library: remote hosts only need to be running OpenSSH (sshd) for Fabric to connect and manipulate files on them. It supports serial or parallel execution and is very easy to learn. On macOS you can install it via Homebrew with `brew install fabric`; on any system it can be installed via pip with `pip install fabric`. On Debian-based systems it can also be installed with apt (`apt-get install fabric`), and on Windows through ActivePython with `pypm install fabric`.

Once you have Fabric installed, you can use it to bootstrap all of your remote systems for Saltstack. I have a Git repository set up with some basic Fabric definitions that you can use to set up your systems. Feel free to fork it or submit a pull request with your own definitions if you feel so inclined, or just clone it and use the definitions as needed.

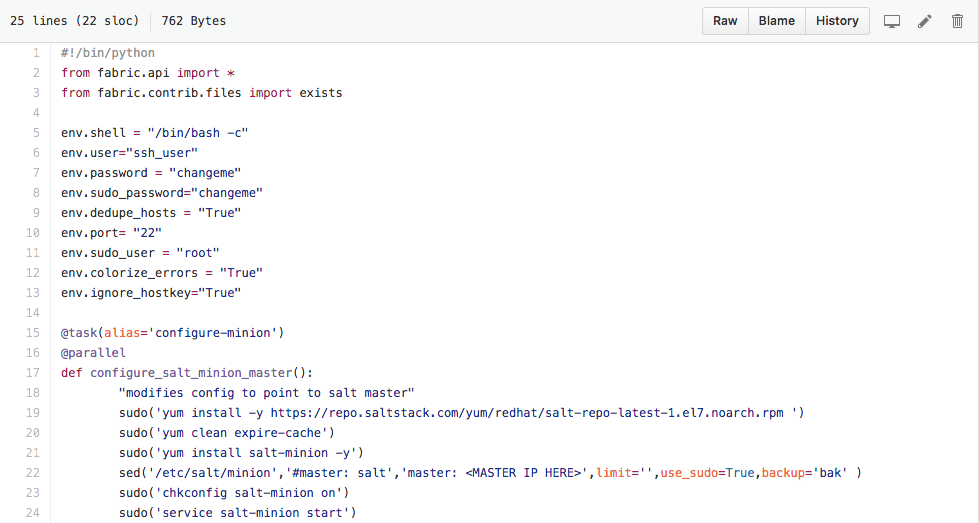

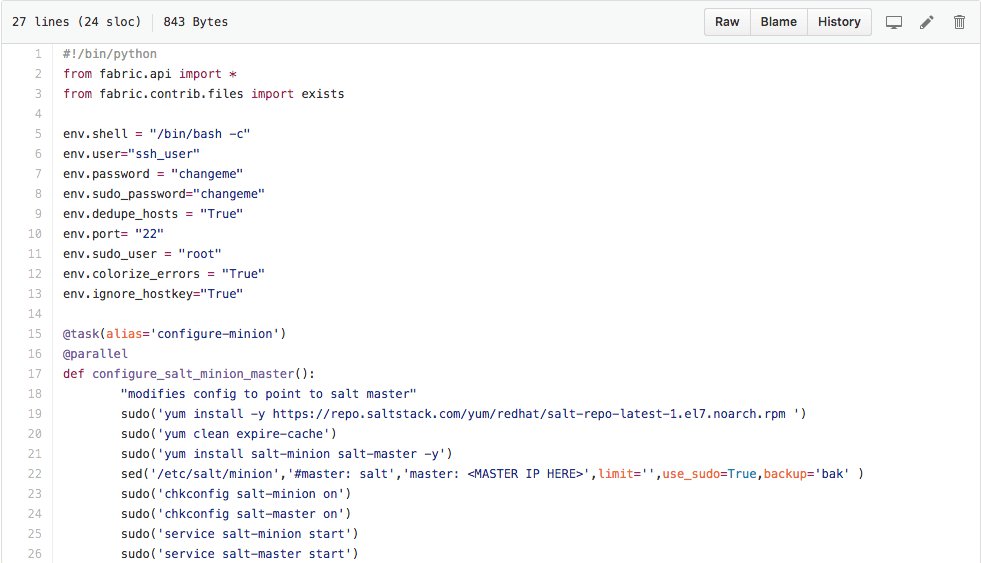

Here are the definitions we are interested in for our purposes:

With these 2 definitions we can configure every SysV-based server in our environment for Saltstack.

Using fabric to install Saltstack:

Modify the 2 definitions above to have your servers’ users, passwords, and relevant IP information where needed.

** DO NOT COMMIT THESE FILES WITH THESE CHANGES BACK TO MY REPO. I DO NOT WANT YOUR PASSWORDS. **

NOTE: If you know Python, you can create Python lists of your servers’ hostnames or IP addresses, store them in the Fabric environment dictionary `env.roledefs`, and then select those roles with the -R switch of the fab command. I will not be covering that in this document, however.

Once you have modified the 2 definitions to contain the proper user and password and IP information, we can get started actually configuring our servers. This is done as follows:

#To configure the salt master, first use the following command:

user@server > fab -c install_configure_salt_master.py -H $MASTER_IP configure_salt_minion_master

#Use the following to configure your salt minions:

user@server > fab -c install_configure_salt_master.py -H minion1,minion2,minion3 configure-minion

Once this has finished executing, you will need to accept the salt-minion keys on your salt-master. You can do this with `salt-key -A`; if you want to review the minions that are connecting first, use `salt-key -L`. Once you have accepted all of the keys for the salt minions, you are officially bootstrapped, and the real fun starts.

Be advised that you can pass any number of hosts in the -H host list, but you CAN overwhelm your local machine. If you are configuring more than around 20 remote devices at a time, edit the @parallel decorator to something like @parallel(pool_size=20) so you don’t overwhelm your local box or internet connection.
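As an alternative to editing the decorator, the host list can be split into batches before it ever reaches fab. The following is a minimal sketch in plain Python, not part of the repository mentioned above; `batch_hosts`, `fab_commands`, and the `minionNN` hostnames are hypothetical names used only for illustration, and the command string simply mirrors the invocation shown earlier.

```python
def batch_hosts(hosts, batch_size=20):
    """Split a list of hosts into consecutive batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [hosts[i:i + batch_size] for i in range(0, len(hosts), batch_size)]


def fab_commands(hosts, fabfile, task, batch_size=20):
    """Build one fab command line per batch (strings only, nothing is executed)."""
    return [
        "fab -c {} -H {} {}".format(fabfile, ",".join(batch), task)
        for batch in batch_hosts(hosts, batch_size)
    ]


if __name__ == "__main__":
    # Hypothetical hostnames; substitute your own minions.
    minions = ["minion{:02d}".format(n) for n in range(1, 46)]
    for command in fab_commands(
        minions, "install_configure_salt_master.py", "configure-minion"
    ):
        print(command)
```

Running the batches one after another keeps the number of simultaneous SSH sessions bounded without touching the task definitions themselves.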

Setting up the Salt base environment:

You will need to specify in your /etc/salt/master file the location where you want to keep your salt states. By default, this is under /srv, which I find to be just fine for my purposes. If you want to run as a non-privileged user, you may want to use /opt/salt and /opt/pillar instead.

To get you started quickly, I have assembled a series of salt states using Splunk’s base configs. To save a lot of headaches, git clone the Saltstack states repo from my GitHub and modify the files to fit your org. You will want to modify ALL of the configs to fit your needs, as the majority of them use dummy data. If you just apply the highstate from this repo as-is, it WILL break your servers’ Splunk installations.

Once you have decided on the location for your salt states, you will need to put all of your Splunk apps in the Splunk-deployment-apps directory. Please note: if you choose to use a location other than /srv/salt, you will need to update /etc/salt/master to reflect the new location.

Testing connectivity and targeting minions:

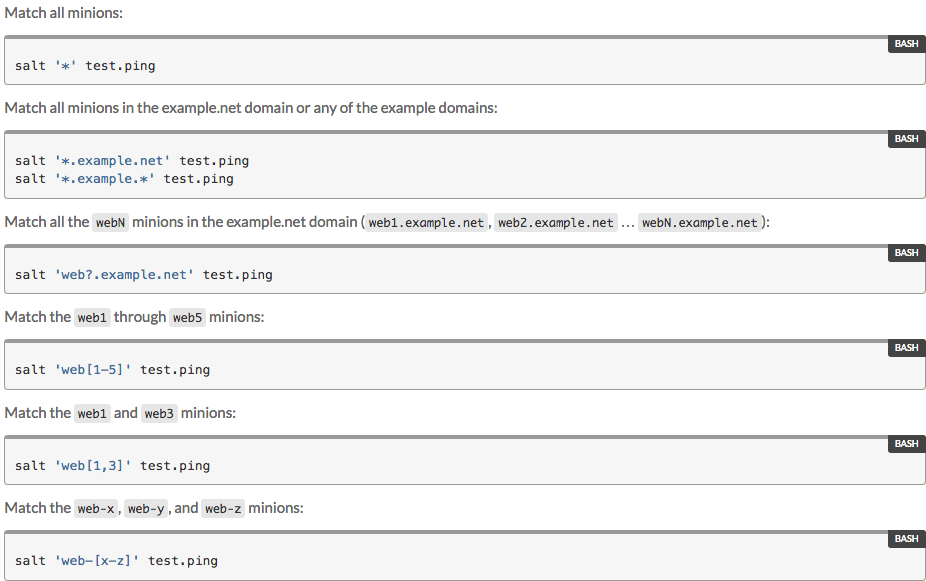

To test connectivity between our master and the salt-minions, we can use the command `salt '*' test.ping`. This targets all of our minions through the '*' target specifier. If all the minions return True, you are good to continue. If any fail, you have an issue somewhere. This is a good time, in turn, to discuss targeting hosts with salt.

Salt supports multiple methods of targeting, but the default is what they call “glob” targeting. This is shell-style globbing based around the minion id. You can also use this for targeting in top.sls.
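Shell-style globbing follows the same wildcard rules (`*`, `?`, `[seq]`) as Python’s standard `fnmatch` module, so it is easy to try a pattern against a list of minion IDs before pointing it at real hosts. This is only a sketch of the matching rule, not Salt’s own code, and the minion IDs below are made up for illustration.

```python
from fnmatch import fnmatchcase

# Hypothetical minion IDs, assumed for this example only.
MINION_IDS = [
    "site1-spksh01",
    "site1-spkix01",
    "site1-spkix02",
    "site2-spkix01",
    "site2-spkcm01",
]


def glob_target(pattern, minion_ids):
    """Return the minion IDs that match a shell-style glob pattern."""
    return [m for m in minion_ids if fnmatchcase(m, pattern)]


if __name__ == "__main__":
    for pattern in ("*", "*spksh*", "site1-*", "site?-spkix0[12]"):
        print(pattern, "->", glob_target(pattern, MINION_IDS))
```

A consistent naming scheme for minion IDs is what makes this style of targeting practical: role and site can both be selected with a single pattern.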

In addition to globbing, you can also target based off of “grains.” Grains in salt are things like the minion architecture, OS, OS version, etc.

salt '*' grains.items sanitize=True

In addition to grain and glob targeting, salt also supports regular-expression (PCRE) targeting if you pass the -E option to the salt command. Assuming you are reading this to improve your Splunk infrastructure, you should have some knowledge of how regular expressions work, and I will not be covering those in this document.

Remote execution, States, and you:

Salt can also be used to remotely execute commands as if issued from the CLI via the cmd.run module. To utilize this functionality, use whichever targeting method you would like, and then pass cmd.run “command”.

salt '*' cmd.run "wget -O /tmp/splunk.rpm http://splunk.com/downloads/splunk-version-whatever.rpm"

# this could then be installed with:

salt '*' cmd.run "rpm -ivh /tmp/splunk.rpm"

(Editor’s note: that link does not work to download Splunk; it’s simply an example.)
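If you end up driving salt from scripts, the pieces of the command line (target, function, arguments) can be assembled as a list rather than a hand-quoted string. The sketch below is an assumption about how you might wrap the CLI, not something from the Salt project; `salt_argv` is a hypothetical helper, it only builds and prints the command, and `shlex.join` requires Python 3.8 or newer.

```python
import shlex


def salt_argv(target, function, *args, **kwargs):
    """Build an argument list: salt <target> <function> [args] [key=value]."""
    argv = ["salt", target, function]
    argv.extend(args)
    argv.extend("{}={}".format(key, value) for key, value in kwargs.items())
    return argv


if __name__ == "__main__":
    argv = salt_argv("*", "cmd.run", "rpm -ivh /tmp/splunk.rpm")
    # shlex.join shows the equivalent shell command with quoting applied.
    print(shlex.join(argv))
    # To actually run it on a salt-master: subprocess.run(argv, check=True)
```

Passing the list form to `subprocess.run` avoids a second layer of shell quoting, which matters once the remote command itself contains quotes.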

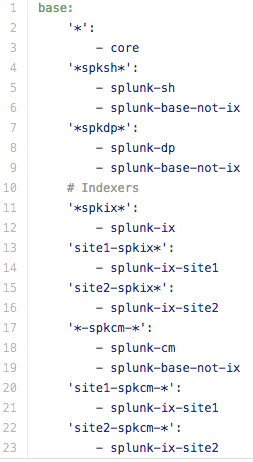

With the topic of remote execution covered, this brings us to the meat and potatoes of salt: state-based Splunk configuration management and command execution. Salt uses user-developed states to enforce configuration on salt-controlled machines. Running `state.highstate` applies the states listed in `/srv/salt/top.sls`: each minion receives every state listed under a target expression (glob or host-based, as described above) that matches it.

If we look at the top.sls file shown above, we can see a few things.

- All servers get the core state

- Servers with spksh (Splunk search heads) get the splunk-sh state, and the Splunk-base-not-ix state

- The deployment servers get the DP state

- Indexer states are separated into one per site, plus a blanket state for all indexers

- The CM states are divided the same way as the indexers; however, the CMs also get the site indexer states to pick up the site definitions

The segmentation outlined here enables modular editing of cluster configs based solely on what we want to update down the line. If we need to modify search head configs, we only edit /srv/salt/splunk-sh/init.sls. For site-agnostic indexer changes, we edit /srv/salt/Splunk-ix/init.sls. The same goes for the other states.
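To make the segmentation concrete, here is a rough Python model of how a top file maps targets to states. It is a sketch of the idea only: the real top.sls is YAML and Salt does the matching itself. The `*spksh*` target and the core, splunk-sh, Splunk-base-not-ix, and Splunk-ix state names come from the text above; every other target and state name is a hypothetical placeholder.

```python
from fnmatch import fnmatchcase

# Target glob -> states, in the order they would appear in the top file.
TOP = {
    "*": ["core"],
    "*spksh*": ["splunk-sh", "Splunk-base-not-ix"],
    "*spkdp*": ["splunk-dp"],                                # hypothetical
    "*spkix*": ["Splunk-ix"],                                # target is hypothetical
    "site1-spkix*": ["splunk-ix-site1"],                     # hypothetical
    "site1-spkcm*": ["splunk-cm-site1", "splunk-ix-site1"],  # hypothetical
}


def states_for(minion_id, top=TOP):
    """Collect every state whose target glob matches the minion ID."""
    states = []
    for pattern, names in top.items():
        if fnmatchcase(minion_id, pattern):
            for name in names:
                if name not in states:
                    states.append(name)
    return states


if __name__ == "__main__":
    for minion in ("site1-spksh01", "site1-spkix07", "site1-spkcm01"):
        print(minion, "->", states_for(minion))
```

Because a minion picks up states from every matching target, a change to a shared state such as the blanket indexer state reaches all sites, while a site-specific state stays contained.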

You can also add your own states to the top.sls file. A useful one, off the top of my head, would be a user management state for Splunk as well as for the *nix servers that our states and Splunk are running on.

Now that we’ve covered the execution of the highstate, what if we only want to apply one state? For instance, if only the search heads need to be updated, is there a way to apply just the ‘splunk-sh’ state? The answer is yes, and we do that using the state.sls function on the salt command line.

salt '*spksh*' state.sls splunk-sh

One thing of note: never test your code in production. I know it may be tempting, but Saltstack makes it very easy to see exactly what your code is going to do before you actually apply it. Simply add test=True to the command and it will do a dry run, returning what changes would be made remotely without actually making them.

salt '*spksh*' state.sls splunk-sh test=True

Output processors, returners, and logging this data to Splunk

There are many ways to process the output salt provides. By default, it uses the highstate output format, but that can be easily changed. When you issue a command to salt, you can pass it the --output= switch and have it dump the output in a variety of formats.

Supported formats are:

- pprint

- json

- raw

- yaml

- txt

- progress (just displays a progress bar)

- no_out (no output at all)

- newline_values_only (display values only, separated by newlines)

Since we are working with Splunk, I like to use JSON: Splunk is very good at native JSON parsing, which makes field extractions and gathering useful data from the log output easy.
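As a rough illustration of why JSON is convenient, the sketch below summarizes a state run per minion. The sample document is hand-written to resemble the general shape of JSON output for a state run (minion ID, then one entry per state with `result`, `changes`, and `comment`); treat that shape, the state IDs, and the `summarize` helper as assumptions and check them against your own output.

```python
import json

# Hand-written sample, assumed to resemble JSON output from a state run.
SAMPLE = """
{
  "site1-spksh01": {
    "file_|-server_conf_|-/opt/splunk/etc/system/local/server.conf_|-managed": {
      "result": true,
      "changes": {"diff": "..."},
      "comment": "File updated"
    },
    "service_|-splunk_|-splunk_|-running": {
      "result": false,
      "changes": {},
      "comment": "Service failed to start"
    }
  }
}
"""


def summarize(raw_json):
    """Count succeeded, failed, and changed states for each minion."""
    summary = {}
    for minion, states in json.loads(raw_json).items():
        counts = {"ok": 0, "failed": 0, "changed": 0}
        # A minion that errored out may return something other than a dict.
        if isinstance(states, dict):
            for state in states.values():
                if not isinstance(state, dict):
                    continue
                if state.get("result") is True:
                    counts["ok"] += 1
                elif state.get("result") is False:
                    counts["failed"] += 1
                if state.get("changes"):
                    counts["changed"] += 1
        summary[minion] = counts
    return summary


if __name__ == "__main__":
    print(json.dumps(summarize(SAMPLE), indent=2))
```

The same per-minion fields are what you would extract in Splunk once the events are indexed, so a quick local summary like this is a handy way to sanity-check a run first.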

By default, however, no matter which output format we choose, the data is only written to our terminal, which leads us to an interesting predicament: how do we get the salt run output from an SSH terminal logged into Splunk? Well, there are a couple of ways. Ryan Currah has written a great blog post on using salt’s TCP_returner functionality to send the data to Splunk on a TCP input.

For our purposes, however, we are going to use the script he wrote that integrates with the Splunk HEC (HTTP Event Collector). If you run a new enough version of salt, note that Salt has added a splunk_http_forwarder return module to its base code for this.

The script I’m currently using for my Splunk ingestion is a modified version of his script that uses the HEC to absorb events into Splunk.

Installation of this is the same as the installation of the TCP_returner in his blog post, so I won’t duplicate already written work. Give his blog a read; there’s a lot of good information on it.

Once you have it installed, you will need to enable the HEC on a Splunk instance and generate a token. Once you have the token, add it to the /etc/salt/master file as outlined in the master Gist. With that in place, restart the salt-master, and you are ready to send your data to Splunk.
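For orientation, this is roughly what a single HEC submission looks like at the HTTP level. It is a minimal standard-library sketch, not the returner script discussed above: the `/services/collector/event` endpoint and the `Authorization: Splunk <token>` header are standard HEC conventions, while the URL, token, sourcetype, and the `build_hec_request` helper are placeholders assumed for the example.

```python
import json
import urllib.request


def build_hec_request(base_url, token, event, sourcetype="salt:state", host=None):
    """Build (but do not send) an HTTP Event Collector request for one event."""
    payload = {"event": event, "sourcetype": sourcetype}
    if host is not None:
        payload["host"] = host
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/services/collector/event",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": "Splunk {}".format(token),
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL and token; use your own HEC endpoint and generated token.
    request = build_hec_request(
        "https://splunk.example.com:8088",
        "00000000-0000-0000-0000-000000000000",
        {"minion": "site1-spksh01", "ok": 1, "failed": 0},
        host="salt-master",
    )
    print(request.get_method(), request.full_url)
    # To send it for real: urllib.request.urlopen(request, timeout=10)
```

Sending a hand-built test event like this is a quick way to confirm the token and firewall rules work before wiring the returner into salt.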

To activate the Splunk HTTP returner, we simply specify it on the command line with the returner=<returner> option. Using our command from before with JSON output and appending the returner option, we get:

salt '*spksh*' state.sls splunk-sh --output=json returner=splunk_http

This will take our pretty JSON output and put it into Splunk, where we can do all kinds of useful data transformations. I use this the most when I’m doing Splunk upgrades, as I have a separate search head and indexer, external to my normal cluster, that all of my _internal, _audit, splunkd, and salt logs go to. By doing this I can track the progress of my upgrades across the cluster from within Splunk.

Conclusion:

Saltstack is a very powerful tool for Splunk professionals looking into Splunk configuration management. Without the use of salt, my cluster would not be nearly as reliable as it is and would be much more difficult to maintain. Through the use of external additions to salt, it is heavily and dynamically expandable to suit the needs of any organization.

Disclaimer:

The information contained in this paper is provided by the author and is provided for educational and informational purposes only. The author accepts no liability for any misuse or malicious use of this information.
