Before I moved over to the professional services (PS) world, I was a Splunk admin who thought he had it all figured out. I had been working with Splunk for almost three years before I made the jump and realized that I didn’t know nearly as much as I thought I did. I was doing many of the things that I’ll reference below. Many of these things work, but they aren’t the most efficient approach. And because they do work, they’ve grown into fake news over the years!

Hopefully, this blog will help you avoid some of the growing pains that I had. If you’re doing any of the things below, it should give you an idea of how to better approach your own environment. But if all else fails, please don’t hesitate to reach out to us for Splunk professional services help.

Splunk Myth 1: A Heavy Forwarder is More Effective Than a Universal Forwarder

We could honestly do an entire post on this concept alone, but the fact of the matter is that a Heavy Forwarder (HF) is very rarely more useful than a Universal Forwarder (UF). If you don’t know the difference, a Heavy Forwarder is an entire Splunk package with indexing turned off. Its only function is to forward data. A Universal Forwarder does the same job, but it is a much smaller package that does not have the web UI that the Heavy Forwarder has. More often than not, we see people use a Heavy Forwarder as an intermediate forwarder, and this is usually contrary to best practices. Unless it is syslog data, it is better to avoid an aggregate layer if possible, because an aggregate layer that is not properly done creates a data funnel. But if you need an aggregate layer, make sure you opt for the Universal Forwarder.

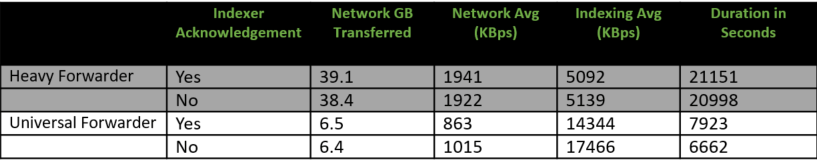

In many cases, a Heavy Forwarder is actually much more intensive on network I/O. This is because a Heavy Forwarder takes on part of the indexer’s job and parses the data before sending it, so what goes over the wire is parsed data rather than the raw stream. Contrary to popular belief, this does not reduce CPU utilization on the indexers. In fact, the amount of data forwarded over the network is approximately SIX TIMES higher when forwarding data from a Heavy Forwarder instead of a Universal Forwarder. Do yourselves a favor, keep your network admin happy, and avoid using a Heavy Forwarder where you can.

There are generally only two use cases where we would ever recommend a Heavy Forwarder over a Universal Forwarder. The most common is when you want to use a heavy add-on like DB Connect, OPSEC LEA, etc., and the other is when you need to forward logs to a third party. The Universal Forwarder cannot do either of these. More specifically, these heavy add-ons typically make use of Splunk Python binaries that don’t exist in a UF package.

Splunk Myth 2: Always Place Your Config Files in etc/system/local

Splunk’s ability to scale is what attracts so many people to the product. It scales better than virtually anything else in the market. I know you’re probably wondering what the system/local directory has to do with scalability, and believe it or not, it has a lot to do with it. When it comes to configuration file precedence within Splunk, the system/local directory always wins. The reason this affects scalability is that the system/local directory CANNOT be remotely managed.

For example, if you’ve ever manually installed a forwarder on a Windows server, you’ve probably noticed that the installer asks you to set the deployment server and receiving indexer during the install. These values are written to the etc/system/local directory upon install and cannot be remotely changed. Instead, leave these values blank when installing on a Windows server and use the following approach to set the appropriate configurations.

The best approach is to create custom applications that contain your configuration files. If you manage your config files via applications, you can use your deployment server to remotely change configuration files on hundreds of thousands of forwarders in a matter of minutes. Say you just stood up a new indexer and now have the task of updating outputs.conf on your Universal Forwarders. If you follow this approach, your week-long project just became a 15-minute task.
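As a rough sketch of what such an app could look like, here is one possible layout. The app name `org_all_forwarder_outputs`, the server class name, the output group name, and the indexer host names are all hypothetical placeholders, and 9997 is assumed as the receiving port.

```ini
# On the deployment server, inside the (hypothetical) app:
# $SPLUNK_HOME/etc/deployment-apps/org_all_forwarder_outputs/local/outputs.conf
[tcpout]
defaultGroup = primary_indexers

[tcpout:primary_indexers]
# Add the new indexer here once; every forwarder picks it up on its next check-in.
server = idx1.example.com:9997, idx2.example.com:9997, idx3.example.com:9997

# serverclass.conf on the deployment server:
# map the app to a group of forwarders (here, all of them).
[serverClass:all_forwarders]
whitelist.0 = *

[serverClass:all_forwarders:app:org_all_forwarder_outputs]
# Restart the forwarder after the app is updated so the new outputs take effect.
restartSplunkd = true
stateOnClient = enabled
```

With something like this in place, adding an indexer is a one-line change to the `server` list followed by a deployment server reload, rather than a visit to every forwarder.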

Splunk Myth 3: Sending TCP/UDP Syslog Data Directly to Indexers

Earlier, we talked about why you should avoid using an aggregate layer before indexing your data. Now we’re going to flip the script a bit. When it comes to syslog data, you want to use an aggregate layer. Often, we see customers who send syslog data either directly to indexers or via a third-party load balancer like NetScaler.

This is a risky approach because it can have a negative effect on your load balancing. The obvious implication of sending directly to indexers is that there is absolutely no load balancing going on. With a third-party load balancer, the load balancer may not switch often enough, or large streams of data can get stuck on one indexer. Essentially, Splunk knows how to break the data into events; a third-party load balancer does not, so it could switch before an entire event makes it to the indexer. In the Splunk world, you want to distribute your indexing as much as possible. Storage is expensive, so the more distribution, the better.

Then there’s the potential data loss during restarts and the inability to filter noisy data. If you send your data directly to indexers and have to restart any one of them, the data that was sent to that box during the restart is lost.

Instead, consider standing up a dedicated syslog server (such as syslog-ng), then deploy a Universal Forwarder to it. I can’t stress enough how critical this is to Splunk. The Universal Forwarder -> Indexer flow of traffic is the ideal scenario, whether you’re working with syslog or reading files from a Windows server, a Linux server, what have you.
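A minimal sketch of the Universal Forwarder side of that setup might look like the following. It assumes the syslog server writes one directory per sending host under `/var/log/remote/`; that path, the app name, and the `network` index are illustrative assumptions, not requirements.

```ini
# On the syslog server's Universal Forwarder, inside a (hypothetical) app:
# $SPLUNK_HOME/etc/apps/org_syslog_inputs/local/inputs.conf

# Assumed layout written by the syslog daemon:
#   /var/log/remote/<sending-host>/<something>.log
[monitor:///var/log/remote/*/*.log]
sourcetype = syslog
index = network
# Take the host name from the 4th path segment (var=1, log=2, remote=3, <host>=4)
# so events are attributed to the original device, not to the syslog server.
host_segment = 4
```

Because the syslog daemon keeps writing to disk while Splunk restarts, the forwarder can pick up where it left off, which is the main point of the aggregate layer here.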

Splunk Myth 4: Data Onboarding is Fire and Forget

There isn’t too much to say on this topic. When you are onboarding data, there are six settings you should ALWAYS set in your props.conf, regardless of whether Splunk is parsing the data correctly or not: TIME_PREFIX, MAX_TIMESTAMP_LOOKAHEAD, TIME_FORMAT, SHOULD_LINEMERGE, LINE_BREAKER, and TRUNCATE.

The approach many people take is that if Splunk is parsing and breaking events on its own, there is no need to set your props.conf. Splunk relies heavily on accurate timestamps and accurate event breaking. You always want to test your data before onboarding in a production environment to ensure that these are accurate.

Even if Splunk is accurately doing these things, you still want to set your props with the six settings mentioned earlier. The more you can do to tell Splunk where to look for these required items, the more accurately and efficiently your Splunk instance will run. However, it is worth mentioning that you should use TAs (technology add-ons) where available because they will typically handle this process for you. Most TAs contain props.conf and transforms.conf files that eliminate the bulk of the legwork for you, although this isn’t guaranteed, so make sure you check the content of your TAs.
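To make the six settings concrete, here is an illustrative stanza. The sourcetype name `acme:app:log` is hypothetical, and the values assume every event starts at the beginning of a line with a timestamp shaped like `2024-01-15 10:23:45.123`; your own data will need its own values.

```ini
# props.conf, for a hypothetical sourcetype whose events begin with
# a timestamp such as: 2024-01-15 10:23:45.123
[acme:app:log]
# The timestamp sits at the very start of the event.
TIME_PREFIX = ^
# "2024-01-15 10:23:45.123" is 23 characters, so look no further than that.
MAX_TIMESTAMP_LOOKAHEAD = 23
TIME_FORMAT = %Y-%m-%d %H:%M:%S.%3N
# Break events with LINE_BREAKER alone instead of merging lines afterwards.
SHOULD_LINEMERGE = false
# Start a new event only where a newline is followed by a date, so that
# multi-line events (stack traces, for example) stay together.
LINE_BREAKER = ([\r\n]+)\d{4}-\d{2}-\d{2}\s
# Maximum event length in bytes before truncation.
TRUNCATE = 10000
```

It is worth running sample data through a test instance with a stanza like this before pushing it to production, since a wrong `TIME_FORMAT` or `LINE_BREAKER` fails quietly.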

Splunk Myth 5: The More Indexes, The Better

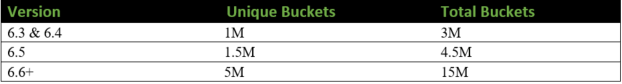

More often than not, you will see Splunk administrators who don’t really plan out their indexes. In many cases, we see indexes created on a per-sourcetype basis. Not only is this an overkill approach that makes management much more cumbersome, but it can also cause performance degradation and, in some extreme cases, even data loss. This is because every index creates its own buckets, and in a clustered environment there are limits on how many buckets a cluster manager can manage.

You also have to consider that your data retention and role-based access are both index-based. This is where the management of so many indexes becomes difficult and cumbersome. The ideal approach to planning your indexes should revolve around these two aspects. You should also consider these items when planning your indexes:

- Data that is commonly searched together can more than likely be grouped together.

- Organize your indexes by the ownership group. For example, name your index with a broad, general term like “network” (searched as “index=network”).

- From there, group logs from your firewalls, switches, routers, etc. by their corresponding sourcetype under your “network” index.

- This approach can also simplify your role-based access requirements, as your indexes will be grouped, for the most part, by the team that owns the data.
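As a sketch of how the points above might translate into configuration, the fragment below defines a single `network` index with its own retention and a role limited to it. The 90-day retention and the role name `network_team` are assumptions chosen for illustration.

```ini
# indexes.conf: one index for everything the network team owns.
# Firewalls, switches, and routers are told apart by sourcetype, not by index.
[network]
homePath   = $SPLUNK_DB/network/db
coldPath   = $SPLUNK_DB/network/colddb
thawedPath = $SPLUNK_DB/network/thaweddb
# Retention is set per index: 90 days * 86400 seconds = 7776000 (assumed value).
frozenTimePeriodInSecs = 7776000

# authorize.conf: access is also granted per index.
# "network_team" is a hypothetical role name.
[role_network_team]
importRoles = user
srchIndexesAllowed = network
srchIndexesDefault = network
```

One retention setting and one role cover every network sourcetype here, where a per-sourcetype design would need a stanza and an access rule for each.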

The bottom line? Keep it simple. Take the time to logically plan out your indexes prior to your deployment. Once your data is indexed, there is no do-over so it is definitely worth the extra effort and attention.

Conclusion

Splunk is a robust product and there are tons of different ways to oil the gears, but it’s worth the extra effort to go through Splunk Docs. Try to understand the difference between A WAY to do things and the RIGHT WAY to do things. There are plenty of myths and misconceptions around the best way to configure your Splunk environment. For what it’s worth, every environment is different. What works in your environment may not work in the next person’s, but there are some things Splunk admins should try to avoid if possible. But if all else fails, don’t hesitate to reach out to SP6 for Splunk Professional Services assistance.

About SP6

SP6 is a Splunk consulting firm focused on Splunk professional services including Splunk deployment, ongoing Splunk administration, and Splunk development. SP6 has a separate division that also offers Splunk recruitment and the placement of Splunk professionals into direct-hire (FTE) roles for companies that need help acquiring their own full-time staff, given the hiring challenge that currently exists in the market.