We’ll admit it: There are times we’ve been bitten by simple omissions we made laying the foundation for a Splunk environment.

Getting that late-night call that Splunk is down is always frustrating, but it’s even worse when you realize the problem could’ve been prevented during the initial Splunk deployment.

With that in mind, here are three Splunk best practices that will save you time (and face) and allow you to enjoy your evenings in peace.

Splunk Best Practice #1: Use Volumes to Manage Your Indexes

We’ll assume you’re already familiar with the maxTotalDataSizeMB setting in the indexes.conf file — it’s used to set the maximum size per index (default value: 500 GB).

While maxTotalDataSizeMB is your first line of defense against running out of free disk space (at which point indexing halts), volumes protect you from any miscalculations made when creating a new index. With volumes, you can bind indexes together and ensure that, combined, they don’t surpass the limit you set.

Even if you’ve diligently sized your indexes to account for the right growth, retention, and space available, you can’t guarantee that another admin who creates an index in your absence will do the same.

So, how does using volumes to manage your indexes in Splunk work?

Volumes are configured via indexes.conf, and they require a very simple stanza:

[volume:CustomerIndexes]

path = /san/splunk

maxVolumeDataSizeMB = 120000

In the example above, the stanza tells Splunk we want to define a volume called “CustomerIndexes.”

In addition, we want it to use the path “/san/splunk” to store the associated indexes. Finally, we want to limit the total size of all of the indexes assigned to this volume to 120,000 MB.

The next step is to assign indexes to this “CustomerIndexes” volume. This is also done in indexes.conf by prefixing each index’s home (hot/warm) and cold paths with the name of the volume:

[AppIndex]

homePath = volume:CustomerIndexes/AppIndex/db

coldPath = volume:CustomerIndexes/AppIndex/colddb

thawedPath = $SPLUNK_DB/AppIndex/thaweddb

[RouterIndex]

homePath = volume:CustomerIndexes/RouterIndex/db

coldPath = volume:CustomerIndexes/RouterIndex/colddb

thawedPath = $SPLUNK_DB/RouterIndex/thaweddb

PRO TIP: Use $_index_name to reference the name of your index definition.

[RouterIndex]

homePath = volume:CustomerIndexes/$_index_name/db

coldPath = volume:CustomerIndexes/$_index_name/colddb

thawedPath = $SPLUNK_DB/$_index_name/thaweddb

Why this approach?

The next admin might not know about the volume, but once it’s set, it’s hard to miss when creating a new index via indexes.conf or the web interface. With volumes, the volume’s “maxVolumeDataSizeMB” setting caps the combined size of every index assigned to it, even when each index’s own “maxTotalDataSizeMB” setting would allow more.

If left to their own devices, AppIndex and RouterIndex could each grow to the default maximum size of 500,000 MB, taking up a total of 1 TB of storage. With volumes, we no longer have to worry about this.

As a bonus, you can also use separate volumes for cold and warm/hot buckets to correspond with different storage tiers.
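As a rough sketch of that tiered layout, the stanzas below split hot/warm and cold buckets across two volumes. The volume names (HotWarm, Cold), the /ssd/splunk path, and the size limits are placeholders chosen for illustration, not recommendations; only /san/splunk and AppIndex come from the example above.

```ini
# indexes.conf (illustrative sketch; names, paths, and sizes are assumptions)

# Faster storage tier for hot and warm buckets
[volume:HotWarm]
path = /ssd/splunk
maxVolumeDataSizeMB = 40000

# Larger, slower tier for cold buckets
[volume:Cold]
path = /san/splunk
maxVolumeDataSizeMB = 120000

[AppIndex]
homePath = volume:HotWarm/$_index_name/db
coldPath = volume:Cold/$_index_name/colddb
# thawedPath stays outside the volumes, as in the stanzas above
thawedPath = $SPLUNK_DB/$_index_name/thaweddb
```

Each volume enforces its own maxVolumeDataSizeMB, so the two tiers can be sized independently of each other.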

Splunk Best Practice #2: Use Apps and Add-Ons Wherever Possible

As cliché as it sounds, in the Splunk world, it pays to be the admin that says, “There’s an app for that.”

Sure, you can use apps from Splunkbase to simply extend Splunk’s functionality, or use the deployment server to manage inputs and technical add-ons (TAs) on universal forwarders.

But what about using apps to manage an environment?

It’s not only possible — it’s recommended.

To do this, let’s first assume you have all of your Splunk nodes configured to use your deployment server. If not, you can use this handy command-line interface (CLI) command to do that on each instance:

splunk set deploy-poll <IP_address/hostname>:<management_port>

splunk restart

After you’ve done that, continue by identifying stanzas that will be common across groups of nodes (search heads, indexers, forwarders, etc.) or across all nodes.

For example, consider these two useful stanzas in outputs.conf, which make sure every node knows which indexers it needs to forward data to:

[tcpout]

defaultGroup=Indexers

[tcpout:Indexers]

server=IndexerA:9997, IndexerB:9996

Deployment server directory structure

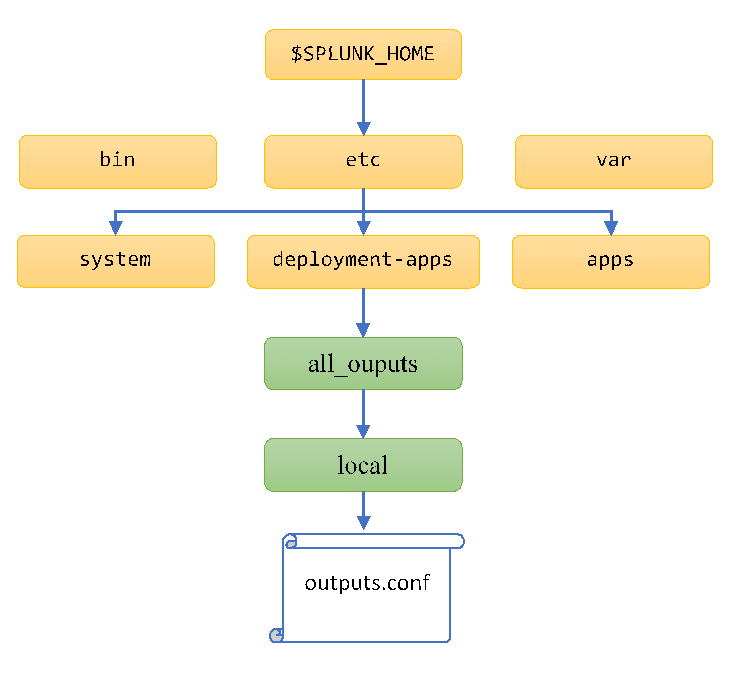

Next, create the directory structure shown above on your deployment server to accommodate your new app’s config files. You may then place your version of the outputs.conf file, with the stanzas above, in the “local” subdirectory.

In this example, we’re naming our app “all_outputs.”

Congratulations — you’ve completed the hard part!

Now, go ahead and repeat this exercise, creating a new app on the deployment server for every config file that a group of nodes has in common. Here are a few ideas:

- All search heads usually share the same search peers, and this can be accomplished via an app that provides distsearch.conf

- Indexers will need to have the same version of props.conf and transforms.conf to consistently parse the data they ingest

- Forwarders can use an app configuring the allowRemoteLogin setting via server.conf, allowing them to be managed remotely
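As a sketch of the last idea, a hypothetical app (called all_forwarder_server here, a name made up for this example) could carry a server.conf like the one below in its “local” subdirectory. Whether “always” is the right value depends on your security requirements, so treat it as an assumption rather than a recommendation.

```ini
# server.conf in a hypothetical app: all_forwarder_server/local/server.conf
[general]
# Accept remote logins on this instance. The other documented values are
# "never" and "requireSetPassword".
allowRemoteLogin = always
```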

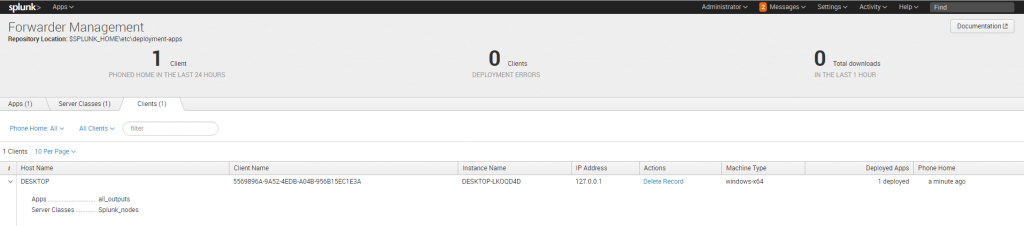

To tie everything together, log on to your deployment server’s GUI and go to Settings > Forwarder Management. Create server classes for the different groups of nodes in your Splunk environment. Assign the appropriate apps and hosts to each server class.
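The same mapping can also be written by hand in serverclass.conf on the deployment server, which is the file Forwarder Management saves its settings to. The sketch below assumes a server class named AllNodes and a catch-all host pattern; both are illustrative, and only the all_outputs app name comes from the example above.

```ini
# serverclass.conf on the deployment server (illustrative sketch)

# Server class matching every deployment client. Narrow the pattern
# (for example, to a hostname prefix) to build per-role classes.
[serverClass:AllNodes]
whitelist.0 = *

# Assign the all_outputs app to that server class
[serverClass:AllNodes:app:all_outputs]
stateOnClient = enabled
restartSplunkd = true
```

Setting restartSplunkd tells clients to restart after the app is installed or updated, which a change to outputs.conf generally needs in order to take effect.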

Now, the next time someone asks you how to stand up a new heavy forwarder (or any other instance type), you can answer, “There’s an app for that.”

Splunk Best Practice #3: Keep an Eye on Free Disk Space

We know from experience that Splunk frequently checks the free space available on any partition that contains indexes. Before executing a search, it also checks for enough free space on the partition where the search dispatch directory is mounted (usually wherever Splunk is installed).

By default, the threshold is 5,000 MB, and it is configurable via the “minFreeSpace” setting in server.conf. When free space drops below it, expect a call from your users informing you that Splunk has stopped indexing or that searches are not working.
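For reference, minFreeSpace lives in the [diskUsage] stanza of server.conf. The value below is an arbitrary example of raising the threshold, not a recommendation.

```ini
# server.conf (illustrative value)
[diskUsage]
# Minimum free space, in MB, that Splunk requires on the partitions it
# checks (default: 5000)
minFreeSpace = 10000
```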

You should start to keep a close eye on your disk space when your instance is running on a partition with less than 20 GB available. This is because Splunk will use several GB for its own processes.

It can be difficult to pinpoint exactly where an environment will grow, because several directories grow with the daily use of Splunk and are not governed by the limits set on indexes or volumes.

Nevertheless, here are the top places to look for growth in an environment:

- Dispatch directory ($SPLUNK_HOME/var/run/splunk/dispatch)

- KV store directory ($SPLUNK_DB/kvstore)

- Configuration bundle directory ($SPLUNK_HOME/var/run/splunk/cluster/remote-bundle)

- Knowledge bundle directory ($SPLUNK_HOME/var/run/searchpeers)

To avoid any surprises with your disk space, we recommend setting up a low-disk-space alert in your monitoring tool of choice.
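If Splunk itself is one of the tools you monitor with, a scheduled alert along the following lines is one possible starting point. This is only a sketch: the stanza name, the schedule, and the 20,000 MB threshold (taken from the 20 GB guideline above) are assumptions, and it relies on the partitions-space REST endpoint reporting free space in MB in a field named “free”, which you should confirm on your version.

```ini
# savedsearches.conf (illustrative sketch; name, schedule, and threshold
# are assumptions)
[Low Disk Space]
search = | rest /services/server/status/partitions-space \
| where free < 20000 \
| table splunk_server, mount_point, free, capacity
enableSched = 1
cron_schedule = */15 * * * *
# Trigger whenever at least one partition is below the threshold
counttype = number of events
relation = greater than
quantity = 0
alert.track = 1
```

Because searches stop working once free space drops below minFreeSpace, an alert like this only helps while its threshold sits comfortably above that value. An external monitor remains the safer option.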

Get More Out of Splunk with SP6

Want to improve your Splunk usage beyond these three Splunk best practices?

Schedule a consultation with us today to learn how our elite-rated Splunk team can make your data more secure, compliant, and performant.

About SP6

SP6 is a niche technology firm advising organizations on how to best leverage the combination of big data analytics and automation across three distinct practice areas:

- Cybersecurity Operations and Cyber Risk Management (including automated security compliance and security maturity assessments)

- Fraud detection and prevention

- IT and DevOps Observability and Site Reliability

Each of these distinct domains is supported by SP6 team members with subject matter expertise in their respective disciplines. SP6 provides Professional Services as well as ongoing Co-Managed Services in each of these solution areas. We also assist organizations in their evaluation and acquisition of appropriate technology tools and solutions. SP6 operates across North America and Europe.